Introduction

Website background: My client's website popa.qa is a traditional Chinese Q&A site that lets members ask and answer questions. All questions and answers are stored on the database server.

Step 1: Create The Project

This blog post illustrates the problem and the steps to fix it, with the project source code. Below is a description of the basic project:

- NodeJS backend (server.js)
  - An API (/get-database-records) that simulates fetching database records
- Web-components frontend (index.html)
  - An example component, IndexPage, that uses LitElement to render the database records

To start the server, type: npm start

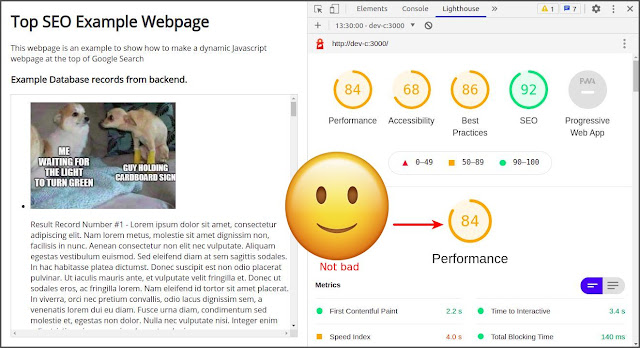

Then I checked the page speed using Lighthouse, which is built into the Chrome browser:

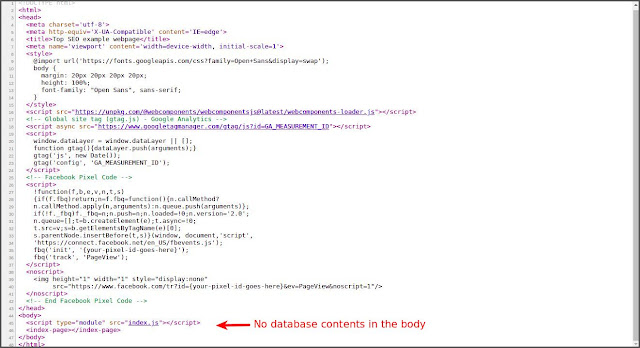

A performance score of 84 is not bad. I attribute this mainly to web components and shadow DOM, which keep rendering lightweight. However, the problem is the empty content. To see it, right-click the page and select "View page source". OMG, there is nothing in the HTML:

This problem affects every client-side rendering approach, including React, Angular, and even jQuery, which is why this post is titled "Dynamic Javascript page". It hurts SEO significantly, because a crawler that does not run the JavaScript sees an empty page. To solve this problem, I chose Headless Chrome.
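To reproduce what a non-JavaScript crawler sees without opening the browser, here is a small sketch that fetches the raw HTML and checks whether the component tag contains any server-rendered content. It assumes Node 18+ (for the global fetch) and that IndexPage is registered under the tag name index-page; the tag name and both helper names are hypothetical, so adjust them to your project.

```javascript
// Hypothetical helper: true if the first <tagName>...</tagName> in the raw HTML
// contains anything other than whitespace. A simple regex check, good enough for
// a quick diagnosis but not a full HTML parser.
function hasServerRenderedContent(html, tagName) {
  const re = new RegExp(`<${tagName}[^>]*>([\\s\\S]*?)</${tagName}>`, 'i');
  const match = html.match(re);
  return Boolean(match && match[1].trim().length > 0);
}

// Fetch the page source the way a crawler that does not run JavaScript sees it.
// Requires Node 18+ for the global fetch().
async function checkPage(url, tagName) {
  const res = await fetch(url);
  const html = await res.text();
  if (hasServerRenderedContent(html, tagName)) {
    console.log(`${url}: <${tagName}> has content in the raw HTML`);
  } else {
    console.log(`${url}: <${tagName}> is empty, content is rendered client-side only`);
  }
}

// Usage, with the server from Step 1 running:
// checkPage('http://localhost:3000/index.html', 'index-page');
```

With the basic project, this should report the tag as empty, matching what "View page source" shows.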

Step 2: Using Headless Chrome to Generate a Static Page

Luckily, there is Puppeteer, a Node library for controlling Headless Chrome. It's easy to install: npm install --save puppeteer

Add Puppeteer to server.js (at the top of the file):

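```javascript
// ...
const fs = require('fs'); // Used below by fs.writeFileSync (skip if server.js already requires it)
const puppeteer = require('puppeteer');
// ...
```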

To use Puppeteer, I created an API endpoint that triggers it:

```javascript
// ... (add this above app.listen() in server.js)

// Call this API to generate the static page.
app.get('/gen-static-pages', async (req, res) => {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    // Wait until network activity settles so the records have been fetched and rendered
    await page.goto(`${req.protocol}://${req.get('host')}/index.html`, {waitUntil: 'networkidle2'});
    const html = await page.content(); // Headless Chrome returns the rendered HTML
    // Save into the public folder so the server can serve it as /index-static.html
    fs.writeFileSync('public/index-static.html', html);
    res.end('Static files generated');
  } finally {
    await browser.close(); // Always close the browser, even if rendering fails
  }
});

// ...
```

Start the server again: npm start

Open the browser and call the API: http://localhost:3000/gen-static-pages. The static file index-static.html should be generated, and the browser should show the success message:

Open the public/index-static.html file and look at the HTML inside:

Oh, what!? Why is it still empty!? After checking the LitElement documentation, I figured out that a trick is needed.

Step 3: Disabling Shadow DOM Under Certain Conditions

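The empty content is caused by shadow DOM: page.content() serializes the document's regular (light) DOM, and whatever LitElement renders inside a shadow root is not part of that serialization. But I don't want to disable shadow DOM all the time, because it benefits component-based programming. So I added a URL query parameter (crawler=1) that tells the frontend to disable it. Add the function below to the component in public/index.js:

```javascript
// ... constructor() ...

createRenderRoot() {
  const urlParams = new URLSearchParams(window.location.search);
  const isCrawler = urlParams.get('crawler') === '1';
  if (isCrawler) {
    // Render into the element itself (light DOM), so page.content() can capture it
    return this;
  }
  // Default LitElement behavior: render into an open shadow root
  return super.createRenderRoot();
}

// ... connectedCallback() ...
```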

Then add this parameter to the URL when calling Puppeteer:

```javascript
app.get('/gen-static-pages', async (req, res) => {
  // ...
  await page.goto(`${req.protocol}://${req.get('host')}/index.html?crawler=1`, {waitUntil: 'networkidle2'});
  // ...
});
```
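If you later need to pre-render more than one page with the crawler=1 parameter, one option is to reuse a single browser and build each URL with the standard URL API, so paths that already have a query string still get the parameter appended correctly. This is a sketch under assumptions: renderPages and toCrawlerUrl are hypothetical helpers, the list of paths is up to you, and the browser is passed in (for example the result of puppeteer.launch()) rather than launched inside the function.

```javascript
// Hypothetical helper: add crawler=1 to a page URL, keeping any existing query string.
function toCrawlerUrl(baseUrl, path) {
  const url = new URL(path, baseUrl);
  url.searchParams.set('crawler', '1');
  return url.toString();
}

// Hypothetical helper: render each path with one shared browser and return an
// object mapping path -> rendered HTML. `browser` is a Puppeteer Browser,
// e.g. the result of `await puppeteer.launch()`.
async function renderPages(browser, baseUrl, paths) {
  const results = {};
  for (const path of paths) {
    const page = await browser.newPage();
    try {
      await page.goto(toCrawlerUrl(baseUrl, path), {waitUntil: 'networkidle2'});
      results[path] = await page.content();
    } finally {
      await page.close(); // Free the tab even if navigation fails
    }
  }
  return results;
}
```

Inside the route you would launch the browser, call renderPages with the site's origin (`${req.protocol}://${req.get('host')}`) and your list of paths, write each result to a file, and close the browser in a finally block. Rendering sequentially keeps memory use predictable; parallel tabs would be faster but heavier.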

Then re-run the API to generate the static page and check the contents:

- Restart the server: npm start

- To trigger the API, browse: http://localhost:3000/gen-static-pages

- Browse the result static page: http://localhost:3000/index-static.html

Then use "View page source" to see the HTML:

It works! Now the problem of the empty contents is fixed.

Further Optimization

It's not over yet. This alone won't put a site at the top of the search rankings. For my client popa.qa, I implemented additional optimizations around the static file generation:

- Replaced all external stylesheets with embedded stylesheets.

- Removed all web font downloads (Google's crawler does not care about font styles).

- Removed all Google Analytics and Facebook Pixel libraries (Google's crawler is just a bot, so there is nothing to track).

- Removed all YouTube iframe tags.

- Rewrote the backend server app from NodeJS to Cloudflare Workers for higher performance and lower network latency.

- Set up a separate cloud server that runs Puppeteer periodically via a cron job. The job pre-renders all dynamic pages to static pages and uploads them to the Cloudflare KV store.

- The Cloudflare Workers server app uses user-agent detection to tell whether a visitor is a human or a bot. Humans are routed to the conventional logic; bots get the static page straight from the KV store.

- Implemented structured data markup for QAPage.
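The human/bot split in the Cloudflare Workers app could look roughly like the sketch below. It is not the code running on popa.qa: the bot pattern, the function names, the KV key scheme (the URL path), and the binding name STATIC_PAGES are all assumptions. The handler takes the KV namespace and the normal handler as parameters, so it only needs the standard Request/Response classes (available in Workers and in Node 18+).

```javascript
// Hypothetical, deliberately short list of crawler user-agent substrings.
// Real bot detection usually needs a longer list (or Cloudflare's own bot signals).
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

function isBot(userAgent) {
  return BOT_PATTERN.test(userAgent || '');
}

// kv: a Workers KV namespace (anything with an async get(key) that returns null when missing).
// renderDynamic: the conventional request handler used for human visitors.
async function handleRequest(request, kv, renderDynamic) {
  if (isBot(request.headers.get('User-Agent'))) {
    const path = new URL(request.url).pathname; // Assumed KV key: the URL path
    const html = await kv.get(path);
    if (html !== null) {
      return new Response(html, {
        headers: {'Content-Type': 'text/html; charset=utf-8'},
      });
    }
    // No pre-rendered copy yet: fall through to the normal logic.
  }
  return renderDynamic(request);
}

// Wiring in a module Worker (STATIC_PAGES and renderDynamicPage are hypothetical):
// export default {
//   fetch: (request, env) => handleRequest(request, env.STATIC_PAGES, renderDynamicPage),
// };
```

Falling back to the dynamic handler when a page has not been pre-rendered yet means a newly posted question is still reachable by bots until the next cron run uploads its static copy.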
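For the QAPage structured data item, a minimal sketch of building the JSON-LD on the server side might look like this. The input shape (title, body, answers with text, upvotes, and an accepted flag) is hypothetical; the output uses the schema.org QAPage, Question, and Answer types with properties Google documents for Q&A pages (name, text, answerCount, acceptedAnswer, suggestedAnswer, upvoteCount). Check Google's QAPage guidelines for the full list of required and recommended fields.

```javascript
// question: hypothetical shape { title, body, answers: [{ text, upvotes, accepted }] }
function buildQAPageJsonLd(question) {
  const toAnswer = (a) => ({
    '@type': 'Answer',
    text: a.text,
    upvoteCount: a.upvotes,
  });
  const accepted = question.answers.find((a) => a.accepted);
  const others = question.answers.filter((a) => !a.accepted);

  const mainEntity = {
    '@type': 'Question',
    name: question.title,
    text: question.body,
    answerCount: question.answers.length,
  };
  if (accepted) mainEntity.acceptedAnswer = toAnswer(accepted);
  if (others.length > 0) mainEntity.suggestedAnswer = others.map(toAnswer);

  return {
    '@context': 'https://schema.org',
    '@type': 'QAPage',
    mainEntity,
  };
}

// Serialize into a script tag for the static page. Escaping "<" as \u003c keeps
// user-written text such as "</script>" from closing the element early.
function toJsonLdScript(data) {
  const json = JSON.stringify(data).replace(/</g, '\\u003c');
  return `<script type="application/ld+json">${json}</script>`;
}
```

Because the static pages are generated anyway, the script tag can be injected into the pre-rendered HTML before it is uploaded to the KV store.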

It took a lot of effort to make this happen, but the return is excellent performance and a top search ranking:

Don't believe it? Try it yourself: go to Google PageSpeed Insights and enter the URL of any Popa page to see the result:

The project source code: https://github.com/simonho288/dynamic-js-site-seo

Please leave a comment if you have any questions, or request the additional source code for the Cloudflare Workers app.

Enjoy! 😃
